Words inherently carry meanings and have relationships with other words. For instance, the word cat has a closer relationship to words like kitten and pet than it does to unrelated words like car or apple.

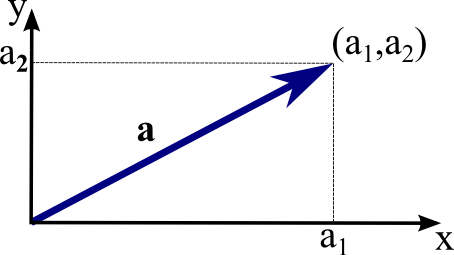

Word embeddings capture these relationships by converting each word into a mathematical representation, specifically a vector of real numbers.

We often visualize these vectors in two dimensions for simplicity, but in practice they have many more dimensions. 300-dimensional vectors are a common choice in popular techniques such as word2vec and GloVe.
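Here is a minimal Python sketch of turning a word into a vector. It assumes a hypothetical five-word vocabulary and fills the matrix with random numbers. A real model would learn these values from a large text corpus. The names `vocab`, `word_to_index`, `embedding_matrix`, and `embed` are illustrative only.

```python
import random

# Hypothetical toy vocabulary; real models cover far larger vocabularies.
vocab = ["cat", "kitten", "pet", "car", "apple"]
word_to_index = {word: i for i, word in enumerate(vocab)}

EMBEDDING_DIM = 300  # a common dimensionality for word vectors

# Embedding matrix: one row per word, one column per dimension.
# Random values stand in for weights a real model would learn from text.
rng = random.Random(0)
embedding_matrix = [
    [rng.uniform(-1.0, 1.0) for _ in range(EMBEDDING_DIM)]
    for _ in vocab
]

def embed(word):
    """Return the vector (a row of the matrix) for a word in the vocabulary."""
    return embedding_matrix[word_to_index[word]]

vector = embed("cat")
print(len(embedding_matrix), len(vector))  # 5 300
```

Looking up a word is just indexing into a row of the matrix. This is why embedding layers in neural network libraries are often described as lookup tables.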

Words that share similar meanings or appear in similar contexts end up with vectors that lie closer together in this high-dimensional space. In practice, the vectors for an entire vocabulary are stored together as a single matrix, with one row per word.
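Closeness between word vectors is commonly measured with cosine similarity, which is the cosine of the angle between two vectors. Euclidean distance is another option. The sketch below uses hand-picked, hypothetical 4-dimensional vectors, not output from any real model, to show how "cat" can score closer to "kitten" than to "car".

```python
import math

# Hypothetical 4-dimensional vectors chosen by hand for illustration;
# learned embeddings typically have hundreds of dimensions.
embeddings = {
    "cat":    [0.9, 0.8, 0.1, 0.0],
    "kitten": [0.8, 0.9, 0.2, 0.1],
    "car":    [0.1, 0.0, 0.9, 0.8],
}

def cosine_similarity(u, v):
    """Cosine of the angle between u and v; 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

print(round(cosine_similarity(embeddings["cat"], embeddings["kitten"]), 3))  # 0.987
print(round(cosine_similarity(embeddings["cat"], embeddings["car"]), 3))     # 0.123
```

Cosine similarity ignores vector length and compares only direction. This is one reason it is a popular default for comparing word embeddings.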